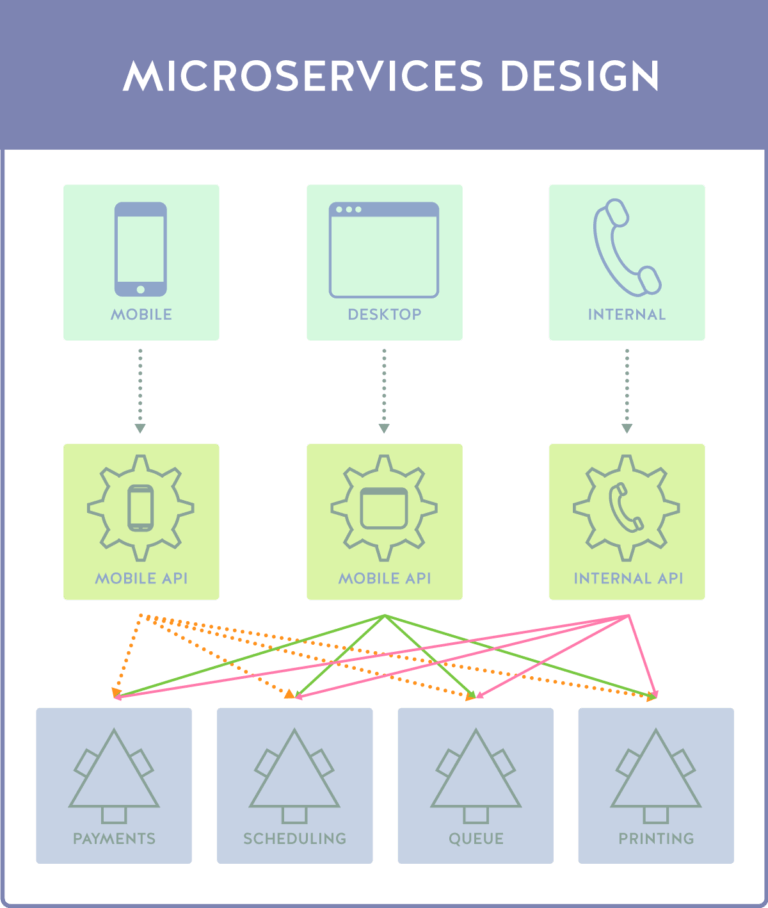

The digital landscape is a relentless arena that demands faster innovation, greater resilience, and the ability to scale applications to meet unpredictable user demand. Microservices design, an architectural approach that has reshaped how software systems are conceptualized, built, and deployed, is central to meeting these challenges. This isn’t just about breaking big problems into smaller ones; it’s about crafting focused, independent units of functionality that collaborate to form robust and adaptive backends. By structuring an application as a collection of loosely coupled, autonomous services, each dedicated to a specific business capability, microservices design offers significant advantages in scalability, agility, fault isolation, and maintainability. It’s a blueprint for building the dynamic, high-performing backends that today’s and tomorrow’s digital enterprises depend on.

The Evolution of Software Backends: Why Microservices Now?

To truly appreciate the strategic imperative behind microservices design, we must understand the journey software architecture has taken, particularly in contrast to the once-dominant monolithic approach.

A. The Reign of Monolithic Systems: A Look Back

For many years, the monolithic architecture served as the default standard for building applications. In this model, an entire application is built as a single, indivisible unit where all its components – including user interface logic, complex business rules, and foundational data access layers – are tightly integrated and deployed as one large, often cumbersome, package. While offering initial simplicity for small-scale projects, monoliths rapidly become a source of significant challenges as applications grow in size and complexity:

- Deployment Nightmares: Even a minor code change in one part of a monolith necessitates a complete rebuild, recompilation, and redeployment of the entire application. This leads to excruciatingly slow release cycles, extended downtime windows for updates, and a dramatically increased risk of introducing regressions or unintended side effects across the vast, interconnected codebase. The act of pushing a small bug fix could become a high-stakes, all-encompassing event, draining developer resources and extending time-to-market.

- Scalability Roadblocks: Monolithic applications are notoriously inflexible when it comes to selective scaling. To handle increased user traffic or processing load, the entire system must be scaled horizontally (adding more servers) or vertically (upgrading a single server), even if only a tiny, isolated component is experiencing high demand. This ‘all-or-nothing’ approach leads to highly inefficient resource utilization and inflated infrastructure costs, as valuable computing resources are wasted on components that are underutilized or idle.

- Technological Stalemate: Introducing new programming languages, upgrading existing frameworks to their latest versions, or migrating to different database technologies within a monolith is an arduous, complex, and often prohibitive undertaking. The pervasive tight coupling makes it nearly impossible to innovate or adopt cutting-edge tools without risking widespread disruption to the entire application. This often leads to accumulated technical debt and an inability to leverage modern advancements that could provide significant competitive advantages.

- Development Bottlenecks: As development teams grow larger to tackle increasingly complex monolithic applications, coordinating efforts on a single, shared codebase becomes a logistical and communication nightmare. Merge conflicts, shared mutable states, and a lack of clear ownership over distinct functionalities inevitably lead to reduced individual developer productivity, frustrating delays, and a significantly slower overall pace of development.

- Catastrophic Failure Potential: A single point of failure, a critical bug, or an unhandled exception within just one component of a monolithic application can potentially trigger a catastrophic collapse of the entire system. This results in widespread service disruptions, significant business impact, and a profoundly negative experience for end-users, as the entire application becomes unavailable due to an issue in an isolated part.

B. The Rise of Microservices: Decentralizing the Backend

Microservices emerged as a distributed, more resilient response to these limitations of monolithic designs. This architectural style decomposes an application into a suite of small, autonomous, specialized services. Each service adheres to a set of core principles that foster agility and robustness:

- Focused Business Capability: Every microservice is meticulously designed and implemented to encapsulate a specific, well-defined business capability. For instance, in a comprehensive e-commerce platform, instead of one giant application handling everything, you would find separate, dedicated services responsible for user authentication and authorization, managing the product catalog, handling sophisticated order processing, integrating with secure payment gateways, meticulously tracking inventory levels, and managing customer reviews. Each service becomes an expert in its narrow, well-defined domain.

- Operational Autonomy: A cornerstone of microservices is that each service can be developed, tested, deployed, operated, and scaled independently of all others. This independence means that changes, updates, or unforeseen issues within one service should not ripple through to other parts of the system, fostering genuine modularity and reducing dependencies between development teams.

- API-Driven Communication: Services interact with one another only through explicitly defined, lightweight Application Programming Interfaces (APIs). These typically use REST over HTTP (for synchronous request-response interactions) or message queues (for asynchronous, event-driven communication). These standardized interfaces promote loose coupling and simplify integration between services, regardless of their internal implementation details.

- Technological Pluralism (Polyglot Capabilities): A profound advantage of microservices is the freedom for individual service teams to choose the most appropriate programming language, framework, and data storage technology for their specific service’s unique requirements. This ‘polyglot persistence’ and ‘polyglot programming’ approach empowers teams to leverage specialized tools, optimize performance for particular tasks (e.g., a highly concurrent service might use Go, while a data analysis service uses Python), and innovate without being shackled by a single, monolithic technology stack.

This decentralized, modular approach fundamentally reshapes how applications are built, fostering greater autonomy for development teams, significantly accelerating the entire development lifecycle, and profoundly enhancing the overall resilience, adaptability, and scalability of the software backend. It’s a strategic move from a single, rigid structure to a flexible, distributed ecosystem of collaborating services.

Foundational Characteristics of Microservices Design

Microservices are distinguished by several core characteristics that collectively define this architectural style and set it apart from traditional monolithic or even broader Service-Oriented Architectures (SOAs). These principles guide the design and implementation of effective microservices.

A. Loose Coupling: The Cornerstone of Agility

The principle of loose coupling is paramount in microservices design. Services are deliberately designed to have minimal, well-defined dependencies on each other. This means that modifications, updates, or even unexpected failures in one service should ideally not necessitate changes or redeployments in other services. This fundamental property is what enables independent development, separate deployment pipelines, and isolated scaling, dramatically reducing inter-team coordination overhead and accelerating overall development velocity. Changes within a service should primarily stay within that service.

B. High Cohesion: Focused and Self-Contained

While services are loosely coupled to one another, each individual microservice should exhibit high cohesion internally. This means that all the elements within a service (e.g., its functions, data models, business logic) are functionally related and collectively contribute to a single, well-defined business capability. A highly cohesive service embodies the ‘single responsibility principle’: it is designed to do one thing and do it well, encapsulating its specific domain logic and all associated data. This focus makes services easier to understand, develop, maintain, and test in isolation.

C. Independent Deployability: Unlocking Continuous Delivery

Perhaps the most powerful and transformative attribute, independent deployability, signifies that each service can be built, rigorously tested, and deployed to production environments entirely in isolation from other services. This critical capability is the bedrock upon which true Continuous Integration (CI) and Continuous Delivery/Deployment (CD) pipelines are constructed. It empowers development teams to release updates, bug fixes, and entirely new features frequently, with minimal risk, and without the laborious coordination required for a complex ‘big bang’ release of the entire application.

D. Decentralized Data Management: Data Autonomy for Services

Unlike traditional monoliths that often rely on a single, shared, and centralized relational database, microservices advocate for decentralized data management. This paradigm dictates that each service is the sole owner of its specific data store. This data store can vary widely, allowing teams to select the optimal database technology (e.g., a relational database for transactional data, a NoSQL document database for flexible schemas, a key-value store for caching, or a graph database for relationships) best suited for the service’s unique data characteristics and access patterns. This autonomy reduces data contention, allows for independent data schema evolution, and prevents a single database from becoming a performance bottleneck for the entire system.
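To make the ownership rule concrete, here is a minimal Python sketch assuming two hypothetical services, `CustomerService` and `OrderService`, running in one process purely for illustration. In a real deployment each would run separately with its own database, and the call between them would cross the network. The point is that `OrderService` reads customer data only through `CustomerService`’s public method and keeps its own copy of what it needs, never touching the other service’s store.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CustomerView:
    """Read-only representation that CustomerService exposes through its API."""
    customer_id: str
    name: str


class CustomerService:
    """Hypothetical service that is the sole owner of customer records."""

    def __init__(self) -> None:
        # Private store: no other service reads or writes this directly.
        self._customers: dict[str, dict] = {}

    def create_customer(self, customer_id: str, name: str, email: str) -> None:
        self._customers[customer_id] = {"name": name, "email": email}

    def get_customer(self, customer_id: str) -> Optional[CustomerView]:
        # Public API: exposes only the fields other services may rely on.
        record = self._customers.get(customer_id)
        if record is None:
            return None
        return CustomerView(customer_id, record["name"])


class OrderService:
    """Hypothetical service that owns orders and reads customers via their API."""

    def __init__(self, customers: CustomerService) -> None:
        self._orders: dict[str, dict] = {}  # private order store
        self._customers = customers         # an API client, not a database handle

    def place_order(self, order_id: str, customer_id: str, total: float) -> dict:
        customer = self._customers.get_customer(customer_id)
        if customer is None:
            raise ValueError(f"unknown customer {customer_id!r}")
        # Keep a copy of the data this service needs instead of joining
        # against another service's tables.
        order = {"customer_id": customer_id, "customer_name": customer.name, "total": total}
        self._orders[order_id] = order
        return order


customers = CustomerService()
customers.create_customer("c-1", "Ada", "ada@example.com")
orders = OrderService(customers)
print(orders.place_order("o-1", "c-1", 42.0))
```

Because the email address never leaves `CustomerService`, that service can change how it stores or validates emails without any coordinated change in `OrderService`.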

E. Robust Fault Isolation: Enhancing System Resilience

A significant benefit of the microservices approach is its potential for fault isolation. If a single microservice encounters an error, hits a bug, or crashes outright, its impact can be confined to that specific service, provided that the services calling it protect themselves with timeouts, circuit breakers, and fallbacks. Without those safeguards, callers waiting on a failed or slow service can exhaust their own threads and connections and spread the outage. With them, the failure stays localized instead of cascading into unrelated functionality, which improves the overall resilience, availability, and stability of the backend and keeps core functionality operational during partial degradations, leading to a much more reliable user experience.

F. Embracing Technological Pluralism: The Best Tool for the Job

The microservices architecture explicitly permits and even encourages technology heterogeneity. This means that different teams or even different services within the same overarching application can leverage distinct programming languages, frameworks, libraries, and data storage technologies. For instance, a high-performance, low-latency recommendation engine might be optimally written in Go or Rust with a specialized graph database, while a core content management service might be more efficiently developed in Python with a flexible document database. This flexibility empowers teams to select the ‘best tool for the job,’ optimizing performance, developer productivity, and leveraging specialized ecosystem features where they provide the most value, without compromising the entire system.

G. Alignment with Small, Autonomous Teams: Conway’s Law in Practice

Microservices design naturally aligns with and often drives a significant shift towards an organizational structure composed of small, autonomous, cross-functional teams—often famously referred to as ‘two-pizza teams’ (small enough to be fed by two pizzas). Each team typically gains end-to-end ownership of one or more microservices, encompassing everything from initial design and development through to rigorous testing, seamless deployment, and ongoing operation in production. This end-to-end ownership fosters a strong sense of responsibility, accelerates localized decision-making, and significantly improves communication and collaboration within the team, reflecting Conway’s Law, which states that organizations design systems that mirror their communication structures.

The Unrivaled Advantages of Microservices Design for Modern Backends

The strategic adoption of microservices design offers a compelling array of benefits that directly address many of the chronic limitations inherent in traditional monolithic systems, empowering organizations to build more adaptable, powerful, and future-proof backend software.

A. Unprecedented Granular Scalability

One of the most compelling and immediate benefits of microservices is their ability to deliver granular, efficient scalability. Instead of being forced to scale the entire backend application horizontally (by adding more servers to run copies of a single, massive monolith), you can independently scale only the specific services that are experiencing high demand. For instance, in an e-commerce platform during a flash sale, only the order processing, payment gateway, and perhaps the inventory management services might need to scale up dramatically, while less trafficked services like user profile management or customer support remain at their baseline capacity. This component-level scaling improves resource utilization, can substantially reduce infrastructure costs, and allocates computing resources where they are needed most, avoiding wasteful over-provisioning.
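The arithmetic behind per-service scaling can be sketched with the proportional rule the Kubernetes Horizontal Pod Autoscaler uses: desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). The service names, replica counts, and CPU figures below are illustrative assumptions, not measurements, and the sketch ignores refinements the real controller applies, such as its tolerance band and stabilization windows.

```python
import math
from dataclasses import dataclass


@dataclass
class ServiceLoad:
    name: str
    current_replicas: int
    current_cpu_percent: float  # average utilization across this service's replicas
    target_cpu_percent: float   # utilization the service is sized to run at
    min_replicas: int = 1
    max_replicas: int = 20


def desired_replicas(s: ServiceLoad) -> int:
    # Proportional rule: ceil(current_replicas * current_metric / target_metric)
    raw = math.ceil(s.current_replicas * s.current_cpu_percent / s.target_cpu_percent)
    # Clamp to this service's own bounds; other services are unaffected.
    return max(s.min_replicas, min(s.max_replicas, raw))


# Illustrative flash-sale snapshot: only the hot services need to grow.
fleet = [
    ServiceLoad("orders", current_replicas=4, current_cpu_percent=180, target_cpu_percent=60),
    ServiceLoad("payments", current_replicas=3, current_cpu_percent=120, target_cpu_percent=60),
    ServiceLoad("user-profile", current_replicas=2, current_cpu_percent=55, target_cpu_percent=60),
]

for svc in fleet:
    print(svc.name, svc.current_replicas, "->", desired_replicas(svc))
```

With these inputs, orders grows from 4 to 12 replicas and payments from 3 to 6, while user-profile stays at 2. A monolith would have had to replicate all three components together.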

B. Accelerated Agility and Rapid Time-to-Market

The independent development and deployment capabilities of individual services dramatically enhance an organization’s overall agility. Development teams can operate in parallel on distinct services without significant interdependencies or coordination bottlenecks that plague monolithic development. The smaller, more focused codebases of microservices are inherently easier to comprehend, modify, and test, leading to significantly faster development cycles, quicker identification and resolution of bugs, and the ability to perform more frequent, smaller, and inherently less risky releases. This accelerated pace of innovation empowers businesses to respond with unprecedented speed to evolving market demands, emerging competitive pressures, and changing customer preferences, thereby gaining a crucial competitive edge in the digital economy.

C. Superior Fault Isolation and Enhanced Backend Resilience

As highlighted earlier, the decentralized nature of microservices makes strong fault isolation possible. When a single service fails, slows down, or becomes unresponsive, well-designed callers fail fast or fall back instead of waiting, so the problem stays localized rather than cascading into unrelated functionality. Core business functions remain operational during partial service degradations, providing a more consistent and reliable experience for customers.

D. Unrestricted Technology Flexibility and Continuous Innovation

Microservices design frees development teams from the constraints of a single, monolithic technology stack. The ability to use different programming languages, frameworks, and data storage technologies for different services is a significant advantage: a team can select the most performant, efficient, or suitable technology for a particular service’s requirements. For example, while a low-latency recommendation engine might use Go or Rust with a graph database, a core financial ledger service might be better served by Java and a robust relational database. This flexibility fosters continuous innovation, allows teams to adopt new technologies incrementally, one service at a time, and reduces the risk of the whole system becoming locked into an aging stack.

E. Streamlined Maintenance and Simplified Debugging

Compared to navigating a vast, sprawling, and often interconnected monolithic codebase, the smaller, more focused codebases of individual microservices are considerably easier to understand, maintain, and debug. Developers can quickly pinpoint the source of an issue within a specific service without having to grasp the complexities and interdependencies of the entire backend application. This modularity dramatically reduces the time and effort required for troubleshooting, accelerates bug resolution, and simplifies ongoing system maintenance, significantly improving overall developer efficiency and job satisfaction.

F. Empowered Independent Teams and Organizational Scalability

Microservices design naturally facilitates and thrives within decentralized, autonomous team structures. Each small, cross-functional team owns its services end to end, from design and development through testing, deployment, and production operation. This ‘you build it, you run it’ philosophy fosters greater accountability, accelerates local decision-making (as teams don’t need to wait for centralized approvals), and enables large organizations to scale their development efforts without succumbing to bureaucratic overhead or communication breakdowns.

G. Enhanced Service Reusability Across the Enterprise

When designed with care, foresight, and a clear understanding of business domains, well-encapsulated microservices that provide common business capabilities can be reused across multiple distinct applications within an organization. For instance, a robust ‘User Authentication’ service, a ‘Notification’ service, or a ‘Payment Processing’ service could serve various internal dashboards, customer-facing web applications, and mobile apps. This promotes consistency across the enterprise, reduces redundant development efforts, and accelerates the development of new products and features by leveraging existing, proven, and battle-tested components, leading to a more efficient and cohesive backend development ecosystem.

Navigating the Inherent Challenges of Microservices Design

While the allure of microservices design is strong, offering numerous compelling benefits, organizations must acknowledge that this architectural style also introduces a new and distinct spectrum of complexities and challenges. A successful adoption of microservices is not merely a technical endeavor; it demands careful planning, the implementation of robust tooling, and often, a significant cultural and organizational shift.

A. Heightened Operational Complexity

Managing a highly distributed backend system comprising dozens, or even hundreds, of independent services is inherently more complex than operating a single, cohesive monolith. This increased operational burden manifests in several key areas that require dedicated attention and robust solutions:

- Deployment Orchestration Overhead: Manually deploying and coordinating updates across a multitude of independent services is simply impractical and highly prone to error. This necessitates the adoption of sophisticated deployment orchestration platforms, with Kubernetes having emerged as the de facto industry standard. However, mastering Kubernetes itself introduces a significant learning curve and requires specialized expertise in managing containerized workloads at scale.

- Distributed Monitoring and Logging: Collecting, aggregating, and effectively analyzing logs and metrics from disparate, independently running services becomes a non-trivial task. Understanding the overall system health, identifying performance bottlenecks, and pinpointing issues requires advanced, centralized logging solutions (e.g., the ELK Stack – Elasticsearch, Logstash, Kibana; Grafana Loki; or cloud-native services like AWS CloudWatch Logs, Azure Monitor Logs) and comprehensive monitoring tools that can provide a holistic view of the distributed backend.

- Intricate Distributed Tracing: When a single user request traverses multiple microservices, diagnosing performance bottlenecks or identifying the precise point of failure requires specialized distributed tracing tools. Without proper tracing (e.g., using OpenTelemetry, Jaeger, Zipkin), debugging can feel like searching for a needle in a haystack scattered across many servers, making root cause analysis difficult and time-consuming.

- Dynamic Service Discovery and Routing: In a microservices environment, service instances are constantly scaling up, scaling down, or even failing and being replaced. Services need a dynamic and reliable mechanism to find and communicate with each other in this fluid environment. Implementing robust service discovery (e.g., Consul, Eureka, or Kubernetes’ built-in service discovery) and efficient API routing becomes absolutely critical for seamless inter-service communication and external client access.
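The core of a service registry can be sketched in a few lines. This is a toy, in-memory stand-in: the `ServiceRegistry` class and its methods are hypothetical and are not the API of Consul, Eureka, or Kubernetes. Instances renew a heartbeat, lookups ignore instances whose heartbeat has expired, and callers are spread round-robin across the healthy ones. The clock is injectable so the expiry logic can be tested without waiting.

```python
from __future__ import annotations

import itertools
import time
from typing import Callable


class ServiceRegistry:
    """Toy registry: heartbeat-based registration, TTL expiry, round-robin lookup."""

    def __init__(self, ttl_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._instances: dict[str, dict[str, float]] = {}  # service -> {address: last_seen}
        self._counters: dict[str, itertools.count] = {}

    def heartbeat(self, service: str, address: str) -> None:
        # Registering and renewing are the same operation.
        self._instances.setdefault(service, {})[address] = self._clock()

    def deregister(self, service: str, address: str) -> None:
        # Called on graceful shutdown; crashed instances simply stop heartbeating.
        self._instances.get(service, {}).pop(address, None)

    def healthy(self, service: str) -> list[str]:
        now = self._clock()
        return sorted(
            addr for addr, seen in self._instances.get(service, {}).items()
            if now - seen <= self._ttl
        )

    def resolve(self, service: str) -> str:
        candidates = self.healthy(service)
        if not candidates:
            raise LookupError(f"no healthy instances of {service!r}")
        counter = self._counters.setdefault(service, itertools.count())
        return candidates[next(counter) % len(candidates)]


registry = ServiceRegistry()
registry.heartbeat("inventory", "10.0.0.1:8080")
registry.heartbeat("inventory", "10.0.0.2:8080")
print(registry.resolve("inventory"), registry.resolve("inventory"))
```

Real systems add health probes, persistence, and replication of the registry itself, but the register, expire, and resolve cycle is the same idea.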

B. Overhead of Inter-service Communication

Communication between services over a network (rather than fast, in-memory function calls within a monolith) inherently introduces network latency and the ever-present risk of network failures. Designing resilient communication patterns is therefore essential to backend reliability and performance. This involves implementing retry mechanisms with exponential backoff, using circuit breakers to stop callers from piling requests onto an overwhelmed or failing service, employing bulkheads to isolate the resources used for each dependency, and setting timeouts so that callers never wait indefinitely on unresponsive services. Retries in particular are only safe for idempotent operations, because a request that appeared to fail may already have succeeded on the server.
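A minimal sketch of retries with capped exponential backoff and jitter, assuming a hypothetical `TransientError` that the caller’s client raises for retryable failures such as timeouts or HTTP 503 responses. Per-attempt timeouts are assumed to be enforced by that underlying client, and the `sleep` parameter is injectable so tests don’t have to wait.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 503s, connection resets)."""


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `operation` on TransientError using capped exponential backoff with full jitter.

    Only wrap idempotent operations: a request that appeared to fail may
    already have succeeded on the server.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Ceiling grows 0.1, 0.2, 0.4, ... seconds, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: sleep a random fraction of the ceiling so that many
            # clients recovering from the same outage don't retry in lockstep.
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


attempts = {"n": 0}


def flaky_inventory_call() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("503 Service Unavailable")
    return "reserved"


print(call_with_retries(flaky_inventory_call))  # succeeds on the third attempt
```

Capping the delay keeps worst-case latency bounded, and the jitter avoids the synchronized retry storms that can keep a recovering service overloaded.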

C. Data Consistency Conundrums in Distributed Environments

With each microservice typically owning its own dedicated data store, maintaining data consistency across multiple services becomes significantly more challenging than in a traditional monolithic system that often relies on a single, ACID-compliant (Atomicity, Consistency, Isolation, Durability) relational database. Achieving strong transactional consistency across service boundaries in a distributed system is often impractical due to performance and availability trade-offs. Instead, developers must embrace concepts like eventual consistency and leverage sophisticated design patterns such as the Saga pattern for managing long-running distributed transactions. This requires careful architectural design and a deep understanding of different consistency models and their implications for business processes.

D. The Complexity of Distributed Transactions

Implementing true distributed transactions—where a single business operation spans and requires updates across multiple, independent services—is vastly more complex than handling simple ACID transactions within a monolith. Ensuring that all involved services either successfully commit their changes or gracefully roll back their respective changes in case of failure (a concept known as atomicity across services) requires sophisticated coordination mechanisms, often involving compensating transactions to undo partial operations, which adds significant complexity to the system design and implementation.
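The compensating-transaction idea behind the Saga pattern can be sketched as a small orchestrator. Everything here is hypothetical and in-process for illustration: each step stands for a local transaction inside one service paired with its semantic undo, and a shared dictionary stands in for the saga’s state. If a step fails, the already-completed steps are compensated in reverse order.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]      # local transaction inside one service
    compensate: Callable[[dict], None]  # semantic undo of that local transaction


def run_saga(steps: list[SagaStep], ctx: dict) -> bool:
    """Orchestrated saga: run steps in order; on failure, compensate the
    already-completed steps in reverse order and report failure."""
    log = ctx.setdefault("log", [])
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action(ctx)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            # The failed step's own transaction is assumed not to have committed,
            # so only earlier steps need compensating.
            for done in reversed(completed):
                done.compensate(ctx)
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
        log.append(f"{step.name} ok")
    return True


# Hypothetical local transactions in three services.
def reserve_stock(ctx: dict) -> None:
    ctx["reserved"] = True


def release_stock(ctx: dict) -> None:
    ctx["reserved"] = False


def charge_card(ctx: dict) -> None:
    if ctx["amount"] > 100:
        raise RuntimeError("card declined")
    ctx["charged"] = True


def refund_card(ctx: dict) -> None:
    ctx["charged"] = False


def create_shipment(ctx: dict) -> None:
    ctx["shipped"] = True


def cancel_shipment(ctx: dict) -> None:
    ctx["shipped"] = False


ORDER_SAGA = [
    SagaStep("reserve_stock", reserve_stock, release_stock),
    SagaStep("charge_card", charge_card, refund_card),
    SagaStep("create_shipment", create_shipment, cancel_shipment),
]

print(run_saga(ORDER_SAGA, {"amount": 250}))
```

With an amount above the hypothetical limit, the saga reserves stock, fails at the charge step, and then releases the reservation. A production saga would also persist its progress so it can resume after a crash, and would retry compensations that themselves fail.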

E. Enhanced Effort for Debugging and Troubleshooting

Debugging an issue that originates in one service and propagates its effects through several others can be significantly more challenging and time-consuming than debugging within a single, integrated application. Pinpointing the root cause requires not only robust logging and monitoring but also specialized distributed tracing tools to visualize the flow of requests and identify the exact service where a problem occurred. Without these tools, troubleshooting a microservices backend can quickly become a daunting and resource-intensive task, potentially leading to longer resolution times for production issues.
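Distributed tracing works by propagating a shared trace identifier with every hop, so that a tracing backend can stitch the spans of one request back together. The sketch below hand-rolls the W3C Trace Context `traceparent` header (format `00-<trace-id>-<parent-id>-<flags>`) to show the mechanism. In practice a library such as OpenTelemetry generates and propagates this header for you, and the validation here is simplified.

```python
from __future__ import annotations

import re
import secrets
from typing import Optional

# W3C Trace Context header: version-traceid-parentid-flags, lowercase hex.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_span_id() -> str:
    return secrets.token_hex(8)  # 8 random bytes -> 16 hex characters


def outgoing_traceparent(incoming: Optional[str]) -> str:
    """Return the traceparent a service should send on its downstream calls.

    The trace-id from the caller is kept so every hop shares it; the
    parent-id is replaced with a fresh span id for this service's work.
    """
    match = TRACEPARENT_RE.match(incoming or "")
    if match and match.group(1) != "0" * 32:
        trace_id, _caller_span, flags = match.groups()
    else:
        # No valid context (e.g. a request arriving at the edge): start a new trace.
        trace_id, flags = secrets.token_hex(16), "01"  # 01 = sampled
    return f"00-{trace_id}-{new_span_id()}-{flags}"


# Simulate one request flowing gateway -> orders -> payments.
at_gateway = outgoing_traceparent(None)
at_orders = outgoing_traceparent(at_gateway)
at_payments = outgoing_traceparent(at_orders)
for header in (at_gateway, at_orders, at_payments):
    print(header)  # same trace-id in every line, different span ids
```

Searching logs and spans for that one trace-id then reveals exactly which service in the chain slowed down or failed.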

F. Potential for Higher Overall Resource Consumption

While microservices enable optimized granular scaling, running numerous small, independent services can sometimes lead to higher overall resource consumption than a single, well-optimized monolithic application. Each service typically runs as its own process with its own runtime (for example, a separate JVM or language interpreter), often packaged in its own container, and pays serialization and network costs on every inter-service call. Aggregated across dozens or hundreds of services, these per-service overheads can exceed the footprint of a comparable monolithic deployment, especially if they are not managed efficiently. Containers and serverless functions can reduce the per-service footprint, but careful resource management and optimization remain crucial.

G. Increased Complexity in Testing Strategies

While unit testing individual microservices is often simpler due to their focused nature, integration testing and especially end-to-end testing a complete microservices-based system can be considerably more complex. It requires careful orchestration of multiple services, managing their dependencies, and simulating realistic communication patterns and failure scenarios. This often involves setting up complex test environments, managing distributed test data, and ensuring comprehensive test coverage across service boundaries, adding significant effort to the quality assurance process.
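One common way to contain this complexity is consumer-driven contract testing: the consuming service publishes the fields it relies on, and the provider verifies its responses against that contract in its own pipeline, without standing up the whole system. The sketch below hand-rolls the idea. The contract format, `contract_violations`, and the Inventory handler are hypothetical, and dedicated tools such as Pact implement this far more completely.

```python
from __future__ import annotations

# Hypothetical contract published by the Order service (the consumer): the
# fields it reads from the Inventory service's stock response, and their types.
STOCK_CONTRACT: dict[str, type] = {"sku": str, "available": int, "warehouse": str}


def contract_violations(response: dict, contract: dict[str, type]) -> list[str]:
    """Return the ways `response` breaks `contract`; an empty list means it complies.

    Extra fields are allowed, so the provider can add data without breaking
    consumers; missing fields or changed types are violations.
    """
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field {field!r}")
        elif not isinstance(response[field], expected):
            actual = type(response[field]).__name__
            problems.append(f"{field!r} is {actual}, expected {expected.__name__}")
    return problems


def inventory_stock_handler(sku: str) -> dict:
    """Stand-in for the Inventory service's real handler, exercised in its own CI."""
    return {"sku": sku, "available": 7, "warehouse": "eu-1", "reserved": 2}


# Provider-side check: fails the Inventory build if it would break the Order service.
assert contract_violations(inventory_stock_handler("MUG-01"), STOCK_CONTRACT) == []
```

Because each provider checks every consumer contract in its own build, many cross-service breakages surface before deployment, and the slower end-to-end suite can focus on a few critical journeys.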

H. Profound Organizational and Cultural Transformation

Adopting microservices design is not merely a technical decision; it demands a significant organizational and cultural transformation. Traditional, siloed teams often struggle in a microservices environment. Teams must evolve from being narrowly focused to becoming more autonomous, cross-functional units. This necessitates embracing DevOps practices, fostering a ‘you build it, you run it’ mentality (where teams are responsible for their services from development to production operation), and a willingness to adapt processes, communication patterns, and acquire new skill sets across the entire engineering organization. Without this cultural alignment, the technical benefits of microservices may never be fully realized.

Best Practices for Successful Microservices Design and Implementation

To successfully harness the immense power of microservices and effectively mitigate their inherent complexities, organizations should rigorously adhere to a set of proven best practices. These guidelines are crucial for designing, developing, and operating microservices-based backend systems efficiently and securely.

A. Meticulously Define Clear Service Boundaries

The initial and arguably most crucial step in a microservices journey is to identify core business capabilities and then meticulously define clear, cohesive, and independent service boundaries. Avoid the common pitfalls of creating ‘nanoservices’ (services that are too small, leading to excessive communication overhead and distributed complexity without sufficient benefit) or ‘mini-monoliths’ (services that are too large and encapsulate too many responsibilities, negating many of the microservices benefits). Leveraging principles from Domain-Driven Design (DDD) is highly recommended to guide this decomposition process, ensuring services align with distinct business domains and bounded contexts, thereby fostering genuine encapsulation and autonomy.
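Bounded contexts often show up as the same real-world concept modeled differently in each service. In this hypothetical Python sketch, the Catalog context’s product carries a title and price, while the Inventory context models only stock per warehouse and translates a catalog event into its own model, so only the shared SKU crosses the boundary. The event shape is an assumption for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


# Catalog bounded context: a product is something customers browse and buy.
@dataclass(frozen=True)
class CatalogProduct:
    sku: str
    title: str
    description: str
    price: Decimal


# Inventory bounded context: the "same" product is just a stock-keeping unit
# with quantities per warehouse; no titles, prices, or descriptions.
@dataclass
class StockItem:
    sku: str
    on_hand: dict[str, int]  # warehouse id -> units

    def total(self) -> int:
        return sum(self.on_hand.values())


def on_product_created(event: dict) -> StockItem:
    """Inventory's translation of a (hypothetical) catalog event into its own model.

    Only the shared identifier, the SKU, crosses the boundary.
    """
    return StockItem(sku=event["sku"], on_hand={})


item = on_product_created({"sku": "MUG-01", "title": "Mug", "price": "9.99"})
item.on_hand["eu-1"] = 5
print(item.sku, item.total())
```

If the catalog team later renames or restructures its product fields, the inventory model is unaffected, which is the kind of independence well-drawn boundaries are meant to buy.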

B. Architect for Inherent Independent Deployability

Design every service with the explicit goal of independent deployability. This means minimizing shared libraries, common codebases, or components that could inadvertently create tight coupling or deployment dependencies between services. Use containerization technologies (e.g., Docker) to package each service and its specific dependencies into portable, isolated units. Orchestration platforms like Kubernetes can then automate the deployment, scaling, healing, and overall management of these containerized services at scale, providing a consistent operational environment for your backend services.

C. Embrace Decentralized and Owned Data Management

Empower each service to own and manage its dedicated data store. This fundamental principle prevents database-level coupling, so resist the strong temptation to share databases across services. Embrace polyglot persistence where appropriate, selecting the optimal database technology (e.g., relational, NoSQL document, key-value, graph, time-series) for each service’s specific data characteristics and access patterns. To keep data consistent across services, design for eventual consistency and apply patterns such as the Saga pattern for distributed transactions, with a clear understanding of their implications for data flow and system behavior in a distributed backend.

D. Implement Robust, Resilient Communication Mechanisms

For synchronous inter-service communication (e.g., request-response patterns), favor lightweight, high-performance options such as RESTful APIs over HTTP or gRPC (which runs over HTTP/2). For asynchronous, event-driven interactions, which promote even looser coupling and better scalability, use message queues (e.g., RabbitMQ, Amazon SQS, Azure Service Bus) or event streaming platforms (e.g., Apache Kafka, Amazon Kinesis). Crucially, embed resilience patterns in all service-to-service communication: retries with exponential backoff, circuit breakers (to prevent cascading failures to overwhelmed services), bulkheads (to isolate resources for different dependencies), and carefully chosen timeouts. Together these ensure robustness and graceful degradation in a dynamic, distributed backend environment.
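The asynchronous style can be sketched with an in-memory broker standing in for RabbitMQ, SQS, or Kafka. The `InMemoryBroker` class is hypothetical and deliberately simple: the order service publishes an `order.placed` event and moves on, and each subscriber drains its own queue independently, so the publisher never needs to know who consumes its events.

```python
from __future__ import annotations

import json
from collections import defaultdict, deque
from typing import Callable


class InMemoryBroker:
    """Toy stand-in for a message broker: one queue per (topic, subscriber)."""

    def __init__(self) -> None:
        self._queues: defaultdict[str, dict[str, deque]] = defaultdict(dict)

    def subscribe(self, topic: str, subscriber: str) -> None:
        self._queues[topic].setdefault(subscriber, deque())

    def publish(self, topic: str, event: dict) -> None:
        # Serialize as a real broker would; the publisher never learns who consumes.
        payload = json.dumps(event)
        for queue in self._queues[topic].values():
            queue.append(payload)

    def drain(self, topic: str, subscriber: str, handler: Callable[[dict], None]) -> int:
        """Deliver every pending event to `handler`; return how many were handled."""
        queue = self._queues[topic][subscriber]
        handled = 0
        while queue:
            handler(json.loads(queue.popleft()))
            handled += 1
        return handled


broker = InMemoryBroker()
broker.subscribe("order.placed", "inventory")
broker.subscribe("order.placed", "notifications")

# Order service: publish the event and return without waiting on consumers.
broker.publish("order.placed", {"order_id": "o-1", "sku": "MUG-01", "qty": 2})

# Each consumer processes the event on its own schedule.
stock = {"MUG-01": 10}
emails_sent: list[str] = []


def reserve_stock(event: dict) -> None:
    stock[event["sku"]] -= event["qty"]


def send_confirmation(event: dict) -> None:
    emails_sent.append(event["order_id"])


broker.drain("order.placed", "inventory", reserve_stock)
broker.drain("order.placed", "notifications", send_confirmation)
```

Adding a new consumer, such as an analytics service, only requires a new subscription; the order service does not change. Real brokers typically deliver messages at least once, so production consumers should be written to be idempotent.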

E. Prioritize Comprehensive Observability

Invest significantly in a robust observability strategy encompassing logging, monitoring, and distributed tracing. Implement centralized logging (e.g., the ELK Stack, Grafana Loki, or a cloud-native log service) to aggregate and analyze logs from all services. Use monitoring tools (e.g., Prometheus with Grafana, Datadog, New Relic) to collect, visualize, and alert on metrics related to service health and performance. Adopt distributed tracing frameworks (e.g., OpenTelemetry, Jaeger, Zipkin) to gain end-to-end visibility into how requests flow across multiple backend services, which is indispensable for diagnosing issues, understanding latency, and optimizing performance in complex distributed systems.
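Centralized logging pays off most when every service emits structured records carrying the same correlation fields. This sketch uses Python’s standard `logging` module with a small JSON formatter; the field names (`service`, `trace_id`) are assumptions chosen for illustration, not a required schema.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log pipeline can index fields directly."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            # A shared trace id lets the aggregator join lines from every
            # service that handled the same request.
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service))
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


log = make_logger("orders")
# `extra` attaches trace_id as an attribute on the LogRecord.
log.info("order %s placed", "o-1", extra={"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"})
```

Querying the aggregated logs for one `trace_id` then returns the lines from every service that touched the request, in one place.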

F. Automate the Entire Software Delivery Lifecycle

Automate everything possible across your software development lifecycle. Establish mature Continuous Integration (CI) and Continuous Delivery/Deployment (CD) pipelines for automated building, rigorous testing, and seamless deployment of each individual service. Leverage Infrastructure as Code (IaC) tools (e.g., Terraform, Ansible, Pulumi, AWS CloudFormation, Azure Bicep) to programmatically provision, configure, and manage your underlying infrastructure resources for the backend. Comprehensive automation drastically reduces manual errors, accelerates release cycles, ensures consistency across various environments (development, staging, production), and frees up valuable developer time.

G. Implement an Effective API Gateway Layer

Utilize an API Gateway as the single, unified entry point for all external clients (e.g., web browsers, mobile applications, third-party integrations) to your microservices backend. The API Gateway can intelligently handle a wide range of cross-cutting concerns such as authentication, authorization, rate limiting, request routing to the appropriate backend microservices, and potentially even request aggregation or transformation. This approach simplifies client application development by providing a stable interface and offloads these common responsibilities from individual microservices, allowing them to remain focused on their core business logic.
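The following is a toy Python sketch of three gateway responsibilities applied in order: authentication, per-client rate limiting with a token bucket, and longest-prefix routing. It is not a real gateway. The service addresses and API key are hypothetical, plain API-key lookup stands in for real token validation, and the gateway only resolves the upstream URL rather than proxying the request.

```python
import time


class TokenBucket:
    """Per-client rate limiter: bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, now):
        self.capacity, self.rate = capacity, rate
        self.tokens, self.updated = float(capacity), now

    def allow(self, now):
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Toy gateway: authenticate, rate-limit, then resolve the backend URL (no real proxying)."""

    def __init__(self, routes, api_keys, capacity=10, rate=5.0, clock=time.monotonic):
        self.routes, self.api_keys = routes, api_keys
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets = {}  # one token bucket per API key

    def handle(self, path, api_key):
        """Return (status_code, upstream_url_or_reason) for an incoming request."""
        if api_key not in self.api_keys:  # 1. authentication
            return 401, "unauthorized"
        now = self.clock()
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.capacity, self.rate, now)
        if not self.buckets[api_key].allow(now):  # 2. rate limiting
            return 429, "too many requests"
        for prefix in sorted(self.routes, key=len, reverse=True):  # 3. routing, longest prefix first
            if path == prefix or path.startswith(prefix + "/"):
                return 200, self.routes[prefix] + path
        return 404, "no route"


# Usage with hypothetical service addresses and a frozen clock.
routes = {"/orders": "http://orders-service:8080", "/users": "http://users-service:8080"}
gateway = Gateway(routes, api_keys={"demo-key"}, capacity=2, rate=0.0, clock=lambda: 0.0)
print(gateway.handle("/orders/42", "demo-key"))   # (200, 'http://orders-service:8080/orders/42')
print(gateway.handle("/orders/42", "wrong-key"))  # (401, 'unauthorized')
```

Rejecting unauthenticated and over-limit requests before routing means none of these requests ever reach a backend service. This is the offloading of cross-cutting concerns described above.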

H. Build for Inherent Resilience and Self-Healing

Beyond robust communication, design each service to be inherently resilient and capable of self-healing. Implement circuit breakers so that, once a downstream service starts failing, its callers fail fast instead of hanging on requests that are unlikely to succeed. This frees the caller's resources and gives the struggling service room to recover. Use intelligent retry mechanisms with exponential backoff and jitter for transient network or service failures. Design services to degrade gracefully when dependent services are unavailable, keeping core functionality accessible where possible, for example by serving cached data or partial responses instead of suffering a full outage. Proactive health checks and automated restarts of unhealthy instances are also crucial for maintaining backend stability.
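The following Python sketch combines a circuit breaker with graceful degradation to cached data. The breaker follows the usual closed, open, and half-open cycle. It opens after a configurable number of consecutive failures and lets a trial call through once a cool-down period has elapsed. The recommendations service and its cache are hypothetical. A production breaker would also need to be thread-safe and would typically allow only a limited number of trial calls while half-open.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker short-circuits a call instead of invoking the dependency."""


class CircuitBreaker:
    """Minimal breaker: closed -> open after N consecutive failures -> half-open after a cool-down."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None while the circuit is closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: let a trial call through
        return "open"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            raise CircuitOpenError("dependency marked unhealthy")  # fail fast, no waiting
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures, self.opened_at = 0, None  # success closes the circuit
        return result


def get_recommendations(breaker, fetch, cache):
    """Graceful degradation: live data when possible, last known (possibly stale) data otherwise."""
    try:
        cache["recommendations"] = breaker.call(fetch)
    except Exception:
        pass  # breaker open or call failed: fall through to the cached copy
    return cache.get("recommendations", [])


# Usage with a fake clock and a dependency that is down.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])
cache = {"recommendations": ["cached-item"]}

def recommendations_down():
    raise ConnectionError("recommendation service unavailable")

for _ in range(3):
    print(get_recommendations(breaker, recommendations_down, cache), breaker.state)
```

On the third iteration the breaker is already open, so the failing service is not called at all. The caller still gets a useful, if possibly stale, response from the cache.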

I. Cultivate a Strong DevOps Culture

Successfully adopting microservices design fundamentally requires fostering a strong DevOps culture throughout the organization. This means breaking down traditional silos between development and operations teams, promoting deep collaboration, shared responsibility, and continuous feedback loops. Empower teams to take end-to-end ownership of their services—from writing the code to operating and maintaining it in production, including monitoring and incident response. This cultural shift is paramount for achieving the agility, quality, and operational excellence that microservices promise.

J. Start Small and Iterate Incrementally

Avoid the common and often disastrous pitfall of a ‘big bang’ re-architecture where you attempt to convert an entire monolithic application to microservices all at once. Instead, begin your microservices journey with a small, less critical component of your existing application, or embark on a new greenfield project using microservices. Learn from your initial experiences, gather feedback, iterate on your processes and tooling, and then gradually expand microservices adoption across your organization. The Strangler Fig Pattern is an excellent strategy for incrementally migrating existing monolithic applications. A routing layer is placed in front of the monolith, features are rebuilt as new services one at a time, and traffic for each feature is redirected to its new service until the monolith handles nothing and can be retired. This reduces risk and allows for continuous learning and adaptation without disrupting the core backend.
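At its core, the Strangler Fig Pattern is a routing decision made in front of the legacy system. This minimal Python sketch shows that decision. The monolith address, the path prefixes, and the service names are all hypothetical. A real facade would usually live in an API gateway, reverse proxy, or service mesh rather than in application code.

```python
MONOLITH = "http://legacy-monolith:8000"  # hypothetical address of the existing application


def make_facade(migrated):
    """Strangler fig facade: routes owned by new services go there; everything else stays on the monolith.

    `migrated` maps a path prefix to the new service that now owns it.
    """
    def route(path):
        for prefix, service in migrated.items():
            if path == prefix or path.startswith(prefix + "/"):
                return service + path
        return MONOLITH + path
    return route


# Step 1: only billing has been extracted (hypothetical service names).
route = make_facade({"/billing": "http://billing-service:8080"})
print(route("/billing/invoices/7"))  # http://billing-service:8080/billing/invoices/7
print(route("/catalog/items"))       # http://legacy-monolith:8000/catalog/items

# Step 2: notifications is extracted next; the monolith's share of traffic keeps shrinking.
route = make_facade({"/billing": "http://billing-service:8080",
                     "/notifications": "http://notifications-service:8080"})
```

Each migration step is a small, reversible change to the routing table. If a new service misbehaves, its prefix can simply be pointed back at the monolith.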

The Dynamic Future of Microservices Backends

The microservices ecosystem is a vibrant, dynamic, and continually evolving domain, constantly shaped by advancements in cloud computing, containerization, sophisticated orchestration technologies, and new architectural patterns. Several key trends are poised to define its trajectory in the coming years:

A. The Rise of Serverless Microservices

The convergence of microservices with serverless computing (Functions as a Service, or FaaS) is gaining significant momentum. It allows developers to deploy even smaller, more granular functions that implement individual microservices, while the cloud platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) handles all the underlying infrastructure management, automatic scaling (including scaling to zero), and ‘pay-per-execution’ billing. This trend promises to further reduce operational overhead and accelerate deployment for certain types of event-driven backend workloads, blurring the lines between the two paradigms and enabling highly efficient resource utilization.
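To make this concrete, here is a Python sketch of a serverless microservice: an AWS Lambda handler consuming a batch of SQS messages. The `(event, context)` handler signature and the `Records`, `messageId`, and `body` fields follow AWS's documented Python handler and SQS event shapes. The `batchItemFailures` return value is honored only if the event source mapping has `ReportBatchItemFailures` enabled. The order payload and the `process_order` function are hypothetical.

```python
import json


def process_order(order):
    """Hypothetical business logic for a single order event."""
    if "order_id" not in order:
        raise ValueError("missing order_id")
    return order["order_id"]


def lambda_handler(event, context):
    """Entry point invoked by AWS Lambda with a batch of SQS messages.

    Failed message IDs are returned in the partial batch response format so that
    only those messages are retried, not the whole batch.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))  # json.JSONDecodeError is a ValueError
        except (ValueError, KeyError):
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


# Usage: invoking the handler locally with a hand-built event.
sample_event = {"Records": [
    {"messageId": "m1", "body": json.dumps({"order_id": 42})},
    {"messageId": "m2", "body": "not json"},
]}
print(lambda_handler(sample_event, None))  # {'batchItemFailures': [{'itemIdentifier': 'm2'}]}
```

Because the handler is a plain function of its input event, it can be unit-tested locally like this before it is deployed.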

B. Pervasive Adoption of Service Meshes

Service meshes (e.g., Istio, Linkerd, Consul Connect) are rapidly becoming an indispensable infrastructure layer for managing the inherent complexities of inter-service communication in large-scale microservices environments. A mesh pairs a data plane of lightweight proxies, typically deployed as sidecars next to each service instance, with a control plane that configures those proxies. Together they handle cross-cutting concerns such as traffic management (intelligent routing, load balancing, canary deployments), enhanced security (mutual TLS, access control policies), observability (automated metrics collection, distributed tracing), and fault tolerance (retries, circuit breakers) at the network level rather than in application code. This allows developers to concentrate on business logic while the mesh handles much of the complexity of the distributed backend network.
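With a mesh, you do not write canary-routing logic yourself. You declare per-version traffic weights (in Istio, for instance, as weighted routes in a `VirtualService`), and the proxies apply them. Purely to illustrate what such a weighted split decides, here is a small Python sketch. Hashing a user ID so that each user consistently sees the same version is an assumption of this sketch. A plain weighted split may instead assign each request independently.

```python
import hashlib


def pick_version(user_id, canary_percent):
    """Send roughly `canary_percent`% of users to the canary ('v2') and the rest to stable ('v1').

    Hashing the user ID keeps each user on the same version across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in [0, 100)
    return "v2" if bucket < canary_percent else "v1"


# Usage: a 10% canary across 10,000 hypothetical users.
versions = [pick_version(f"user-{i}", 10) for i in range(10_000)]
print(versions.count("v2") / len(versions))  # roughly 0.10
```

Raising `canary_percent` step by step, while watching the canary's error rates and latency, is the essence of a progressive rollout. A mesh lets you do this by changing configuration instead of redeploying services.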

C. Dominance of Event-Driven Architectures

There’s an increasing and strategic shift towards highly event-driven architectures as a preferred communication pattern within microservices ecosystems. By leveraging asynchronous communication via robust event streams and message brokers (e.g., Apache Kafka, RabbitMQ, NATS, Amazon Kinesis), services become even more loosely coupled, communicate without direct knowledge of each other, and can react to state changes in near real time. This paradigm facilitates building highly responsive and resilient reactive backend systems, supports complex workflows across distributed services without tight dependencies, and makes it easier to scale consumers independently and propagate data changes across the system.
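The decoupling described here comes from publishers knowing only a topic name, never the consumers. This in-process Python sketch illustrates that publish/subscribe relationship. It is only a stand-in for a real broker such as Kafka or RabbitMQ and offers none of their durability, ordering, retries, or cross-process delivery. The topic name and event fields are hypothetical.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for a message broker (illustration only: no persistence or retries)."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # topic -> list of handler callables

    def subscribe(self, topic, handler):
        self.subscribers[topic].append(handler)

    def publish(self, topic, event):
        # The publisher knows only the topic, never which services consume it.
        for handler in self.subscribers[topic]:
            handler(event)


# Usage: an order service publishes; inventory and email services react independently.
bus = EventBus()
reserved, emails = [], []
bus.subscribe("order.placed", lambda e: reserved.append(e["sku"]))        # inventory service
bus.subscribe("order.placed", lambda e: emails.append(e["customer"]))     # notification service
bus.publish("order.placed", {"order_id": 1, "sku": "ABC-1", "customer": "ana@example.com"})
print(reserved, emails)  # ['ABC-1'] ['ana@example.com']
```

Adding a third consumer, such as an analytics service, requires only a new subscription. The order service does not change at all.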

D. Evolution of Enhanced Observability Tools

As backend systems become exponentially more distributed and dynamic, the demand for increasingly sophisticated observability tools (going far beyond basic monitoring and logging) will continue to accelerate. This includes advancements in end-to-end distributed tracing that provides richer context and lower overhead, AI-powered anomaly detection for proactive issue identification and predictive maintenance, and integrated platforms that offer a unified, holistic view of system health, performance, and security across hundreds or thousands of services. The goal is to provide clear, actionable insights into complex backend interactions.
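The AI-powered anomaly detection mentioned here is far more sophisticated than anything that fits in a short example. As a deliberately simple statistical stand-in (not an AI model), this Python sketch flags a latency sample whose z-score against a rolling window of recent samples exceeds a threshold. The window size, threshold, and warm-up count are illustrative assumptions, not recommended values.

```python
import statistics
from collections import deque


class LatencyAnomalyDetector:
    """Flags a latency sample whose z-score against a rolling window exceeds a threshold."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.recent = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, latency_ms):
        """Return True if the sample looks anomalous compared with the samples before it."""
        anomalous = False
        if len(self.recent) >= self.min_samples:
            mean = statistics.fmean(self.recent)
            spread = statistics.pstdev(self.recent)
            # A zero spread (perfectly constant history) is skipped to avoid dividing by zero.
            if spread > 0 and abs(latency_ms - mean) / spread > self.threshold:
                anomalous = True
        self.recent.append(latency_ms)
        return anomalous


# Usage: steady latencies of 100-110 ms, then a spike.
detector = LatencyAnomalyDetector()
normal = [detector.observe(100 if i % 2 else 110) for i in range(30)]
spike = detector.observe(400)
print(any(normal), spike)  # False True
```

Learned models mainly improve on this baseline by handling seasonality, gradual drift, and correlations across many services at once.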

E. Focus on Developer Experience (DX) and Platform Engineering

The industry is placing a renewed and intense emphasis on significantly improving the developer experience (DX) for building, testing, and deploying microservices. Expect to see continued innovation in platform engineering, where internal platforms are built to simplify common microservices patterns, offer robust local development environments that accurately mimic production, provide intuitive configuration management, and streamline the entire inner loop of microservices development. The goal is to make developing with microservices as productive and enjoyable as possible, reducing the cognitive load on individual backend developers and accelerating feature delivery.

F. Integration of Machine Learning and AI in Backend Logic

Machine Learning (ML) and Artificial Intelligence (AI) are no longer siloed projects but are becoming integral components of modern backends. Microservices design facilitates the seamless integration of ML models as dedicated services, allowing them to scale independently and interact with other business logic components via APIs or event streams. This also drives the need for MLOps (Machine Learning Operations) patterns within microservices, ensuring that models are deployed, monitored, and retrained effectively within the distributed backend environment.

Conclusion

Microservices design represents a powerful, evolutionary shift in how backend software is conceptualized and delivered, enabling organizations to build highly scalable, profoundly resilient, and exceptionally agile applications. By intelligently breaking down complex monolithic systems into independent, manageable, and specialized services, it empowers development teams to accelerate their pace of innovation, choose the most suitable technologies for specific tasks, and achieve unprecedented levels of flexibility and continuous improvement.

While the journey to a fully realized microservices architecture presents its own set of challenges—from increased operational complexity and intricate data consistency concerns to the need for a significant cultural transformation—these hurdles are increasingly being addressed by maturing tooling, evolving best practices, and a growing body of collective industry experience. Organizations that strategically embrace and diligently implement microservices design are positioning their backends for long-term success, gaining a crucial competitive advantage in a fast-paced digital landscape. The future of IT infrastructure and application backends is undeniably distributed, and microservices are the definitive blueprint for building robust, adaptable, and future-proof digital solutions.